We use AI every day invisibly when we search on Google or let Apple pick music for us, but few of us trust it to do much more than that. According to a recent study of attitudes toward AI, most don’t think AI is quite ready to take over.

- 67% don’t want AI to make life or death decisions in war

- 64% don’t want AI as a jury in a trial

- 57% don’t want AI to fly airplanes

“When presented with scenarios that directly or indirectly affect them, Americans still trust humans over AI by a wide margin,” says a report by AI company Krista Software based on a survey of 1,000 American adults. “Americans aren’t yet willing to allow AI to make decisions or work tasks where the outcome will potentially affect them.”

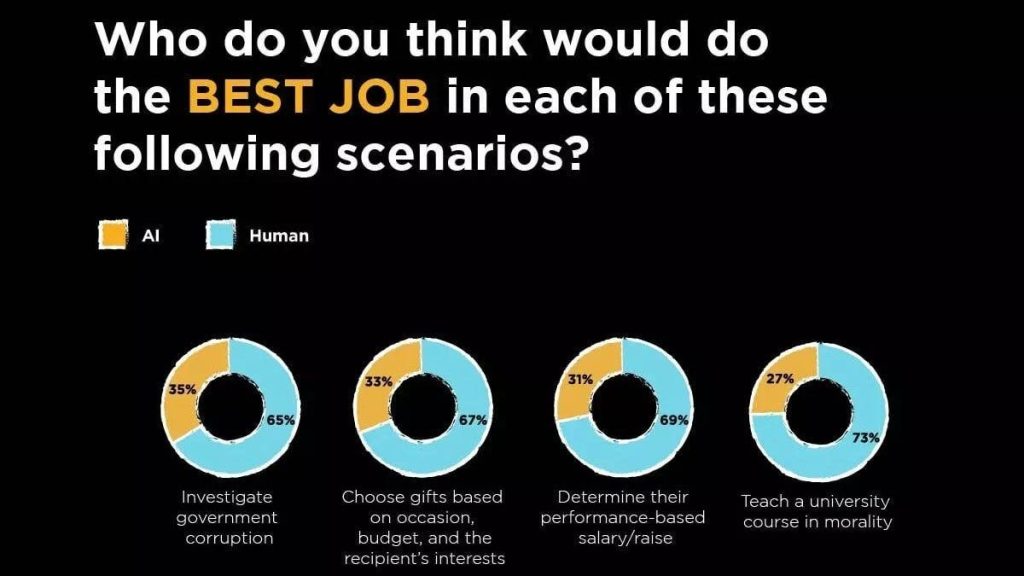

People also believe that humans will do a better job in a wide range of activities:

- investigating corruption (65%)

- choosing gifts (67%)

- deciding on a raise at work (69%)

- teaching a morality course (73%)

- administering medicine (73%)

- picking work outfits (75%)

- writing laws (76%)

- voting (79%)

- doing our jobs (86%)

The astonishing thing at this point is not really that two-thirds of us don’t want AI to make life-or-death decisions in combat, but that a third of a thousand-person survey group are actually ready to grant that power to automated systems, and that 36% of American adults seem to be OK with AI being a juror at their trial.

One of the reasons for both the concern and, paradoxically, the lack of concern is a potential confusion between automation and AI.

AI tries to imitate human intelligence and reasoning, whereas automation simply uses a very direct rules-based approach to make decisions and take action. The first is, somewhat like organic intelligence, subject to whims and errors and occasional outright hallucination. The second does exactly the specific tasks it is programmed to do, every single time. The result is that AI can make undesigned mistakes, whereas automation makes mistakes only when its designers fail to consider all the possible circumstances their systems will encounter.

So trusting AI to do difficult, complex and nuanced things without human oversight is likely to produce some good results (and increasingly good results over time as the AI improves) but also some nonsensical outcomes that no reasonable human would ever suggest. It’s likely that the impressive capabilities of automated production machines make some people overconfident in the capabilities of artificial intelligence as well.

“When it comes to AI, trust is something that must be earned, and part of that involves transparency and understanding,” said Michelsen. “When we responsibly integrate AI into our productivity apps, business processes, customer service centers and countless other applications, we can’t assume users and consumers will have a background in computer or data science.”

Interestingly, men are more willing to grant that trust, according to the survey. 58% of men are OK with AI handing out medicine, but only 45% of women are. 72% of men are fine with AI checking their colonoscopy, while only 57% of women want AI to check their mammograms. And perhaps most shocking, 75% of men would trust AI to do their jobs, while only 48% of women would.
