Google Cloud has added NVIDIA L4 GPU support to Cloud Run, its serverless platform, a significant advancement for AI developers. The feature, which is still in preview, improves the platform’s ability to handle complex AI workloads, allowing developers to deploy, scale and optimize AI-powered applications more efficiently.

Cloud Run has already established itself as a go-to serverless platform for developers due to its simplicity, fast autoscaling and pay-per-use pricing model. These features allow for rapid deployment and scaling of applications without the need to manage servers. With the integration of NVIDIA L4 GPUs, the platform now also supports real-time AI inference, a crucial requirement for many emerging generative AI applications. The NVIDIA L4 GPU is designed for inference at scale, offering up to 120 times the video performance of CPUs and 2.7 times the generative AI performance of the previous GPU generation.

This integration is particularly beneficial for deploying lightweight generative AI models and small language models such as Google’s Gemma (2B and 7B) and Meta’s Llama (8B). These models are popular for tasks like chatbot creation, real-time document summarization and various other AI-driven functions, and they can be used to develop highly responsive and scalable AI applications. With NVIDIA L4 GPUs in Cloud Run, these models can keep serving requests efficiently as instances scale out during peak traffic.

Deploying AI models on Cloud Run with NVIDIA L4 GPUs is designed to be a seamless process. Developers create a container image that includes the necessary dependencies, such as the inference runtime and the AI model itself; the NVIDIA GPU drivers come pre-installed on Cloud Run GPU instances. Once the container is built and pushed to a container registry, it can be deployed on Cloud Run with GPU support enabled. This process allows businesses to take full advantage of Cloud Run’s scalability and NVIDIA’s powerful GPUs without the need for specialized infrastructure management.
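As a rough illustration of what might run inside such a container, the sketch below shows a minimal Python HTTP server using only the standard library. It relies on one documented part of the Cloud Run container contract: the service listens on the port given in the `PORT` environment variable (8080 by default). Everything else is an assumption made for illustration: `run_inference`, `build_response`, `serve` and the JSON request shape are hypothetical names, and the stub simply echoes the prompt where a real service would call a model such as Gemma or Llama.

```python
import json
import os
from http.server import BaseHTTPRequestHandler, HTTPServer


def run_inference(prompt: str) -> str:
    """Hypothetical stand-in for a real model call; it only echoes the prompt."""
    return f"echo: {prompt}"


def build_response(body: bytes) -> bytes:
    """Parse a JSON request body and return a JSON response body."""
    payload = json.loads(body or b"{}")
    prompt = str(payload.get("prompt", ""))
    return json.dumps({"output": run_inference(prompt)}).encode("utf-8")


class InferenceHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        try:
            response = build_response(self.rfile.read(length))
            status = 200
        except (ValueError, AttributeError):
            # Malformed JSON, or a JSON value that is not an object.
            response = json.dumps({"error": "invalid request"}).encode("utf-8")
            status = 400
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(response)))
        self.end_headers()
        self.wfile.write(response)


def serve() -> None:
    """Listen on the port Cloud Run provides through PORT (8080 by default)."""
    port = int(os.environ.get("PORT", "8080"))
    HTTPServer(("0.0.0.0", port), InferenceHandler).serve_forever()
```

The container entrypoint would call `serve()`. Keeping the request handling in `build_response` separates it from the network layer, so the echo stub can later be swapped for a real model runtime without touching the server code.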

NVIDIA GPUs are also available through other Google Cloud services, including Google Kubernetes Engine and Google Compute Engine, giving developers a choice of the level of abstraction they need for building and deploying AI-enabled applications. This flexibility is critical for businesses looking to tailor their AI deployments to specific needs while ensuring that they can scale efficiently as demand fluctuates.

The enhanced capabilities of Cloud Run with NVIDIA L4 GPUs extend beyond just AI inference. They also enable a variety of other compute-intensive tasks such as on-demand image recognition, video transcoding, streaming and 3D rendering. This makes Cloud Run a versatile platform that can cater to a wide range of applications, from AI-driven chatbots to media processing services. The flexibility offered by the platform, coupled with its ability to scale down to zero during inactivity, ensures that businesses can optimize costs while maintaining high performance during active usage.
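To make the cost argument concrete, here is a deliberately simplified sketch comparing an always-on deployment with one that scales to zero. The function name `monthly_cost` and the hourly rate are hypothetical placeholders, not Google Cloud prices. The model also assumes billing stops the moment traffic stops, which ignores cold starts and any idle time before an instance is shut down.

```python
def monthly_cost(active_hours_per_day: float,
                 rate_per_hour: float,
                 days: int = 30,
                 scale_to_zero: bool = True) -> float:
    """Estimate monthly cost for a single instance.

    With scale_to_zero, only active hours are billed; otherwise all 24
    hours of each day are billed. rate_per_hour is in arbitrary currency
    units and is an assumption of this sketch, not a published price.
    """
    if not 0 <= active_hours_per_day <= 24:
        raise ValueError("active_hours_per_day must be between 0 and 24")
    billed_hours = active_hours_per_day if scale_to_zero else 24.0
    return billed_hours * rate_per_hour * days


# Hypothetical workload: 2 active hours per day at 0.5 units per hour.
on_demand = monthly_cost(2.0, 0.5)
always_on = monthly_cost(2.0, 0.5, scale_to_zero=False)
print(on_demand, always_on)
```

Under these made-up numbers, the always-on instance costs 12 times as much per month, simply because it is billed for 24 hours a day instead of 2. The gap narrows as the service gets busier and disappears for a service that is active around the clock.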

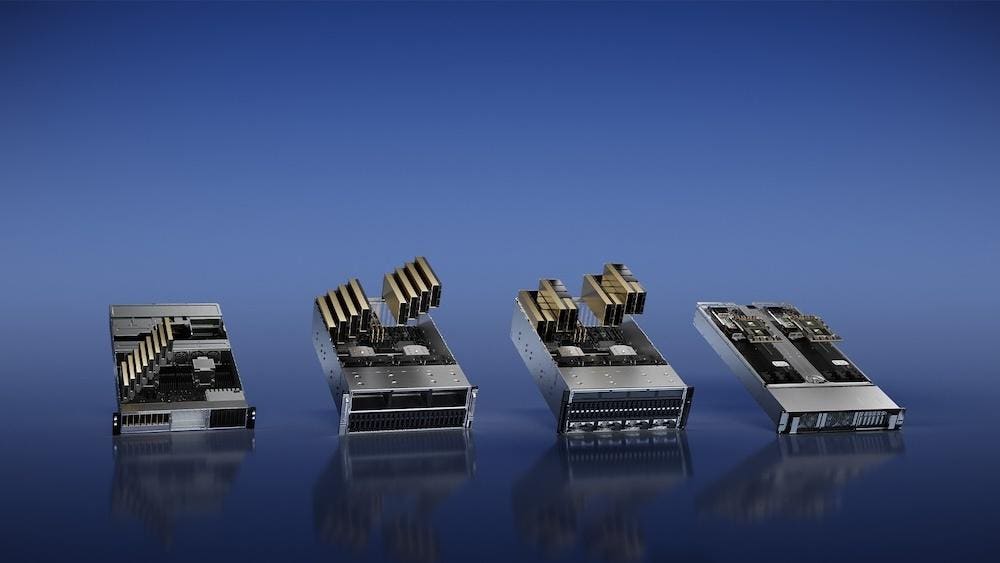

A key aspect of this development is the NVIDIA-Google Cloud partnership, which aims to provide advanced AI capabilities across various layers of the AI stack. This partnership includes the provision of Google Cloud A3 VMs powered by NVIDIA H100 GPUs, which offer significantly faster training times and improved networking bandwidth compared to previous generations. Additionally, NVIDIA DGX Cloud, a software and AI supercomputing solution, is available to customers directly through their web browsers. This allows businesses to run large-scale AI training workloads with ease.

NVIDIA AI Enterprise, which is available on Google Cloud Marketplace, provides a secure, cloud-native platform for developing and deploying enterprise-ready AI applications. This platform simplifies the process of integrating AI into business operations, making it easier for companies to harness the power of AI without needing extensive in-house expertise.

Several companies are already benefiting from the integration of NVIDIA GPUs into Google Cloud Run. For instance, L’Oréal, a leader in the beauty industry, is using this technology to power its real-time AI inference applications. The company has reported that Cloud Run’s GPU support has significantly enhanced its ability to provide fast, accurate and efficient results to its customers, particularly in time-sensitive applications.

Another example is Writer, an AI writing platform that has seen substantial improvements in its model inference performance while reducing hosting costs by 15%. This has been made possible through Google Cloud’s AI Hypercomputer architecture, which leverages NVIDIA GPUs to optimize performance and cost-efficiency.

The addition of NVIDIA L4 GPU support to Google Cloud Run represents a major milestone in cloud-based AI development and serverless AI inference. By combining the ease of use and scalability of Cloud Run with the powerful performance of NVIDIA GPUs, Google Cloud is offering developers and businesses the tools they need to build, deploy and scale AI applications.
